Over the last few months at ChalkCast, we've been developing real-time, GPU-accelerated video filters for students and educators on our virtual classroom platform.

Whether using online, in-person, or hybrid methods, teachers are looking for ways to keep their students engaged. When the COVID-19 pandemic brought the classroom into millions of students' homes, student engagement became significantly more challenging. Many educators today are continuing to teach to a class of 30+ muted and blank video feeds.

Of course, many students have a reason for turning off their videos: they are embarrassed by their home environments. Students who come from low-income backgrounds often don't want to show their living situation. Other students may share tight living spaces with multiple family members and their environment would be distracting to display.

Those who have had to learn virtually through the pandemic can feel exhausted from constantly having to pose in front of a camera. Online learning, at its worst, can be an environment that causes great social fatigue, with few of the positive social cues and rewards that you would find in an in-person classroom.

And on a raw performance level, broadcasting video (especially many videos at once) comes with two costs: 1) bandwidth and 2) the resources it takes to encode a user's video into a suitable format for transmitting over WebRTC.

At ChalkCast, we don't think education needs to be this way.

We are leaning into video filters and the power of machine learning to encourage students to turn on their video. We also hope to give users on low-end hardware and spotty networks some relief without compromising on engagement.

We created various filter types that allow users to change the look and feel of their video stream, while also improving streaming performance.

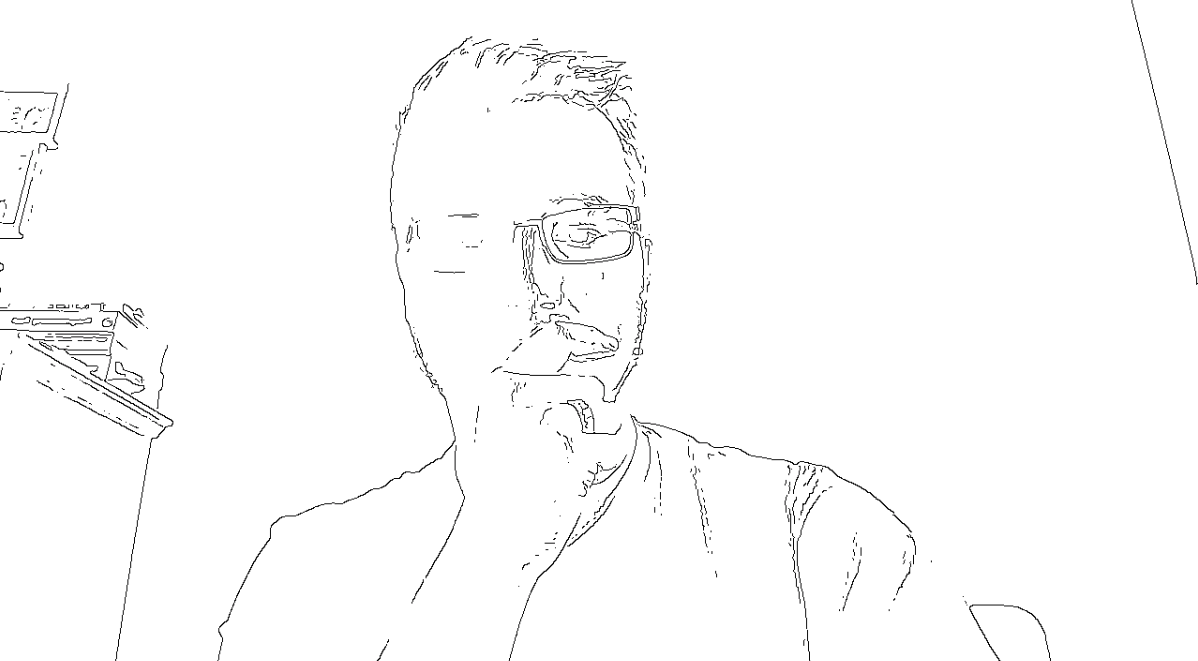

Distortion filters creatively alter a user's video feed while also obscuring the user's surroundings.

Filters like these take student video feeds a step away from reality, providing some relief to the typical formal web-conference feel, while also obscuring a user's background environment. At the same time, they give the teacher some visual indication of the student's level of engagement.

These types of filters also have the added benefit of masking compression artifacts in lossy video streams. In cases where, for performance reasons, we must broadcast a smaller or lower-quality video stream, the drop in quality can be less noticeable when a video filter is on.

In addition, we have been working on color filter effects.

These types of filters do not obscure the user's surroundings, but they do add an element of fun and personalization to the user's video feed.

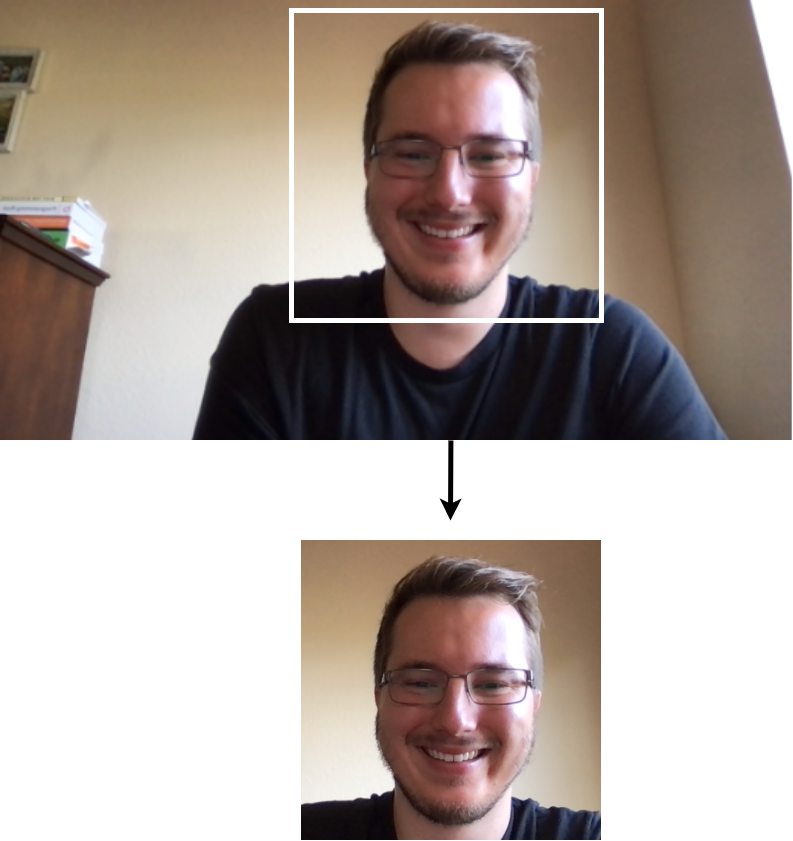

One of the areas that has the most promise in bringing performance improvements to the ChalkCast platform is the use of face detection, which enables users to send less video data overall.

Using a face detection machine learning algorithm, we can analyze where a user's face is in their video frame, then crop the user's video stream down to just the area around their face. This process alone can save 50%+ data when broadcasting the user's stream, because extraneous video information is stripped away. It can also be used in conjunction with the filters shown above.
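To make the savings concrete, here is a minimal sketch of the cropping arithmetic. It assumes a face detector has already returned a bounding box; the `Rect` type, the `cropAroundFace` and `pixelSavings` helpers, and the 25% padding are hypothetical choices for illustration, not ChalkCast's actual implementation. Note that it measures raw pixels dropped, which only approximates the savings in encoded video data.

```typescript
/** Axis-aligned rectangle in pixel coordinates (hypothetical helper type). */
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

/**
 * Expand a detected face box by `padding` (a fraction of the box size)
 * on every side, then clamp the result to the frame bounds.
 */
function cropAroundFace(
  face: Rect,
  frameWidth: number,
  frameHeight: number,
  padding = 0.25, // assumed margin; tune to taste
): Rect {
  const padX = face.width * padding;
  const padY = face.height * padding;
  const left = Math.max(0, Math.floor(face.x - padX));
  const top = Math.max(0, Math.floor(face.y - padY));
  const right = Math.min(frameWidth, Math.ceil(face.x + face.width + padX));
  const bottom = Math.min(frameHeight, Math.ceil(face.y + face.height + padY));
  return { x: left, y: top, width: right - left, height: bottom - top };
}

/** Fraction of raw pixels removed by the crop (0 = none, 1 = all). */
function pixelSavings(crop: Rect, frameWidth: number, frameHeight: number): number {
  return 1 - (crop.width * crop.height) / (frameWidth * frameHeight);
}

// A 400x400 face box in a 1600x900 frame becomes a 600x600 crop.
const faceCrop = cropAroundFace({ x: 560, y: 200, width: 400, height: 400 }, 1600, 900);
const saved = pixelSavings(faceCrop, 1600, 900); // 0.75, i.e. 75% fewer raw pixels
```

In this example the cropped region holds a quarter of the original pixels, which is consistent with the 50%+ figure above whenever the face occupies a modest part of the frame.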

Like the distortion filters, this has the added benefit of reducing the (potentially embarrassing or distracting) background of a user's video.

Filtering a user's video stream is hard. This is primarily due to the browser not allowing access to a user's pixel data from video frames.

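There is currently no widely supported Web API that gives developers direct access to raw pixel data from a user's video, whether through an HTMLVideoElement, an ImageBitmap object, or the user's MediaStream. (An ImageData object does hold raw pixels, but the only way to fill one from a video is to go through a canvas.)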

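There are currently some experimental APIs in Chrome to get a VideoFrame object from a user's stream, but even the VideoFrame object does not expose its pixel data as a directly readable property; the data has to be copied out asynchronously with VideoFrame.copyTo().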

There are many ways of getting around this limitation, the most common of which involves rendering a user's video onto a hidden HTMLCanvasElement through a "2d" context, then requesting the pixel data from the canvas rather than the stream itself.

This technique is effective but wildly inefficient.
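The hidden-canvas workaround can be sketched roughly as follows. The `PixelBuffer` and `Context2DLike` interfaces and the `readFramePixels` helper are hypothetical names introduced here; they describe only the two `CanvasRenderingContext2D` methods the technique relies on (`drawImage` and `getImageData`), so the sketch can also run against a stand-in outside the browser.

```typescript
/** The parts of ImageData this sketch relies on. */
interface PixelBuffer {
  readonly data: Uint8ClampedArray; // RGBA bytes, 4 per pixel
  readonly width: number;
  readonly height: number;
}

/** The two CanvasRenderingContext2D methods this sketch relies on. */
interface Context2DLike {
  drawImage(source: unknown, dx: number, dy: number, dw: number, dh: number): void;
  getImageData(sx: number, sy: number, sw: number, sh: number): PixelBuffer;
}

/**
 * Draw the current video frame onto a (hidden) canvas, then read the
 * pixels back. In a browser, `ctx` would come from
 * `canvas.getContext("2d")` and `video` would be an HTMLVideoElement.
 */
function readFramePixels(
  ctx: Context2DLike,
  video: unknown,
  width: number,
  height: number,
): PixelBuffer {
  ctx.drawImage(video, 0, 0, width, height); // copy the frame into the canvas
  return ctx.getImageData(0, 0, width, height); // copy the pixels back out
}
```

Every frame is copied at least twice (into the canvas, then back out into an array), and this has to happen for every frame of the stream, which is where much of the inefficiency comes from.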

And even assuming that we have the pixel data packaged in a neat array, processing the pixel data itself in JavaScript (or even WebAssembly) is less than ideal.

We initially considered using JavaScript and WebAssembly for processing pixel data.

Using plain JavaScript for filtering requires the least upfront cost in complexity: it's a language every web developer knows, and it offers decent performance overall. However, JavaScript performance is inconsistent.

A problem we ran into early on was that the JavaScript engine's Just-In-Time (JIT) compiler did well when only one kind of filter was in use. If we reused a function for a different kind of filter at runtime, however, performance dropped considerably (sometimes taking up to twice as long), presumably because the JIT had to optimize the same function for different code paths.

Early testing also revealed that, when using JavaScript, we could hit 60fps on simple filters like Grayscale and other color filters, but anything more complex would drop the frame count as JavaScript struggled to keep up with the massive number of calculations required to process every pixel in a video frame many times per second.
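For reference, a simple color filter of the kind mentioned above might look like the sketch below. It assumes the frame is already available as a flat RGBA byte array (as returned by a canvas readback); the `grayscaleInPlace` name and the Rec. 601 luma weights are illustrative choices, not necessarily what ChalkCast used.

```typescript
/**
 * Convert RGBA pixels to grayscale in place.
 * `pixels` holds 4 bytes per pixel: red, green, blue, alpha.
 */
function grayscaleInPlace(pixels: Uint8ClampedArray): void {
  for (let i = 0; i < pixels.length; i += 4) {
    // Weighted sum of the color channels (Rec. 601 luma weights).
    const luma = 0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2];
    // Uint8ClampedArray rounds and clamps the value to 0..255 on write.
    pixels[i] = luma;
    pixels[i + 1] = luma;
    pixels[i + 2] = luma;
    // pixels[i + 3] (alpha) is left untouched.
  }
}

// One red pixel and one half-transparent white pixel.
const sample = new Uint8ClampedArray([255, 0, 0, 255, 255, 255, 255, 128]);
grayscaleInPlace(sample);
```

Even this trivial filter touches every pixel of every frame on a single CPU thread, so the cost grows quickly once a filter needs to sample several neighboring pixels per output pixel.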

Since performance was a strong consideration for us, blowing up the user's tab with JavaScript code just to filter video frames was unacceptable.

We also investigated using WebAssembly (WASM) for pixel processing.

WebAssembly is a low-level assembly-like language that is now supported in every major, modern browser. It can be compiled from languages like Rust or C++, and it offers native-like performance on the web. It can also be written by hand in WASM's text format .wat, then compiled to the binary format .wasm and optimized through a secondary optimization step. However, using WebAssembly in this way is not common.

When we first started our investigation into browser-based video filters, Rust had not yet stabilized SIMD (single instruction, multiple data) operations; currently, it's still in Rust nightly. We also had very little familiarity with C/C++, so writing WASM by hand was the quickest way to try out computing multiple pixels at once on the CPU with SIMD instructions, which is the closest thing you can get to parallel processing in the browser without resorting to Web Workers.

The result was a bump in the time and complexity necessary to get WASM working. However, once we did, we got better performance, and JavaScript's JIT issues disappeared in the face of WebAssembly's more consistent, austere performance profile.

But even when using WebAssembly, there was no getting around the inefficiency of rendering video frames to a hidden canvas to get the pixel data, as well as the cost of doing all the work on the user's CPU.

WebGL is a browser API based on OpenGL ES, the embedded-systems subset of the OpenGL (Open Graphics Library) specification. It enables writing hardware-accelerated programs that run on a user's GPU, as opposed to JavaScript and WASM, which run on a user's CPU.

It requires using a very narrowly focused rendering pipeline, optimized for processing computer graphics, which makes it less flexible, but it also provides more efficient utilities for uploading image data such as video frames to the GPU through the WebGLRenderingContext.texImage2D() API.

No more need for an intermediate canvas to get pixel data.

WebGL gives developers enormous power for doing computer graphics on the web, but it comes at the cost of complexity.

There is a significant time and skill investment in learning computer graphics, especially if developers have not already been exposed to it, and the WebGL/OpenGL API is low-level and complex. It also requires learning the OpenGL Shading Language (GLSL), a C-like language that can be punishing for newcomers.

That being said, WebGL's performance is unbeatable when it comes to computer graphics on the web.

This is an over-simplification, but fundamentally, CPUs excel at performing sequential, deep calculations, while GPUs excel at performing massively parallel, non-intensive calculations. A typical CPU can have between 2 and 64 cores, which means, generally speaking, only 2 to 64 pieces of information can be computed at the same time, whereas a typical GPU can have something like 400 to 8,000+ cores. These cores are not as powerful as the CPU's, but they can achieve significantly greater throughput by batching unrelated calculations into groups of hundreds or thousands at once.

This is what makes the GPU good for computer graphics: A 1600x900px video contains 1,440,000 pixels. Each of these pixels must be individually sampled and filtered to produce a video filter effect. At that scale, every bit of parallelization counts!
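As a rough worked example of that scale, the sketch below multiplies the frame size by the 60fps target mentioned earlier. The `pixelsPerSecond` helper is a hypothetical name, and the byte count assumes uncompressed RGBA at 4 bytes per pixel.

```typescript
/** Number of pixel evaluations a filter must perform each second. */
function pixelsPerSecond(width: number, height: number, fps: number): number {
  return width * height * fps;
}

const perFrame = 1600 * 900;                      // 1,440,000 pixels per frame
const perSecond = pixelsPerSecond(1600, 900, 60); // 86,400,000 pixel evaluations per second
const rgbaBytesPerSecond = perSecond * 4;         // 345,600,000 bytes of RGBA data per second
```

A filter that samples several neighboring pixels per output pixel multiplies these numbers again, which is why spreading the work across thousands of GPU cores pays off.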

WebGL runs on the user's GPU. It therefore reaps all the advantages (and difficulties) that come with working with the GPU.

ChalkCast, as a high-performance video broadcasting app, tends to be CPU intensive: the CPU has to encode each user's video stream into a format that can be sent over WebRTC, and this process is resource intensive. Since the ChalkCast app tends to be more CPU-bound than GPU-bound, it makes sense to try to offload some of those performance costs to the GPU.

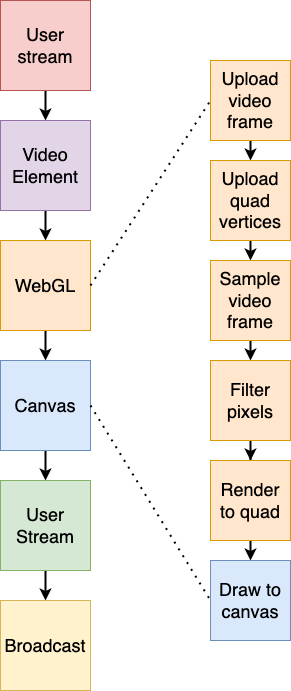

In the ChalkCast app, we sync up a user's video stream with a hidden video element, using its srcObject property. We then pull frames from that video element and upload them to the GPU via WebGL's WebGLRenderingContext.texImage2D(), where we can manipulate the pixel data of the video frame with a filter shader and render it back to a canvas.

Once the filtered video is rendered to a canvas, it can be turned back into a video stream via the canvas's HTMLCanvasElement.captureStream() API.
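The upload and capture steps of that pipeline can be sketched as below. This is a simplified illustration under stated assumptions, not ChalkCast's actual code: the function names, the `u_frame` and `v_texCoord` shader identifiers, the grayscale shader, and the 30fps capture rate are all hypothetical, and shader compilation, vertex setup, and the draw call are omitted.

```typescript
/** Example WebGL 1 fragment shader: a grayscale filter applied per pixel on the GPU. */
const grayscaleFragmentShader = `
  precision mediump float;
  uniform sampler2D u_frame;   // the uploaded video frame
  varying vec2 v_texCoord;     // supplied by a vertex shader (not shown)
  void main() {
    vec4 color = texture2D(u_frame, v_texCoord);
    float luma = dot(color.rgb, vec3(0.299, 0.587, 0.114));
    gl_FragColor = vec4(vec3(luma), color.a);
  }
`;

/** Create a texture configured for non-power-of-two video frames. */
function createVideoTexture(gl: WebGLRenderingContext): WebGLTexture {
  const texture = gl.createTexture();
  if (!texture) throw new Error("Could not create WebGL texture");
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // WebGL 1 requires clamped wrapping and no mipmaps for such textures.
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
  return texture;
}

/** Upload the video element's current frame to the GPU; call once per rendered frame. */
function uploadVideoFrame(
  gl: WebGLRenderingContext,
  texture: WebGLTexture,
  video: HTMLVideoElement,
): void {
  gl.bindTexture(gl.TEXTURE_2D, texture);
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, video);
}

/** Turn the canvas that WebGL renders into back into a MediaStream. */
function captureFilteredStream(canvas: HTMLCanvasElement, fps = 30): MediaStream {
  return canvas.captureStream(fps);
}
```

In a browser, `uploadVideoFrame` would typically be called from a `requestAnimationFrame` loop just before drawing, and the stream returned by `captureFilteredStream` would be the one handed to WebRTC in place of the raw camera stream.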

This process sounds longer than using JavaScript and the plain canvas "2d" API, but it is significantly more resource-efficient.

When using WebGL, you have total control over every step of the rendering process. You control where the pixel comes from, how it gets there, and what you do with it.

That means a larger margin for error, but it also means more control over where you can optimize your code for a leaner performance profile.

We've shown only a few types of filters you can create, but the possibilities for computer graphics on the web are virtually endless, and, by extension, so are the products that can be built on them.

One last thing I want to mention is the new, up-and-coming WebGPU standard.

WebGPU is a new Web API for doing both computer graphics and general computations on the GPU. It is designed to be more flexible with better performance than either the current WebGL or WebGL2 specifications.

WebGPU is scheduled to be available in Chrome starting in September this year (2022), opening up even more possibilities for computer graphics processing on the web.

It's an exciting time for web graphics!

Interested in working for an innovative edtech company? Come work at ChalkCast.